Last Updated on 10/04/25.

I am a Robotics Engineer at Dexterity focusing on GPU-first Reinforcement Learning algorithms. I am part of the Compute Acceleration Team, where I work on accelerated Machine Learning inference and develop custom CUDA kernels for geometric algorithms.

Previously, I received my Ph.D. from the Robotics Institute, where I worked in the RoboTouch Lab, advised by Dr. Wenzhen Yuan and Dr. Ioannis Gkioulekas. My thesis was titled “A Modularized Approach to Vision-based Tactile Sensor Design Using Physics-based Rendering”.

Before that, I completed my master's in Robotics at CMU, advised by Dr. Katerina Fragkiadaki and Dr. Katharina Muelling. I received my B.Tech. in Electrical Engineering from IIT Kanpur.

In my spare time I like contributing to open-source projects, running, hiking and exploring local art.

Find my CV here.

Email: arpit15945 [at] gmail [dot] com

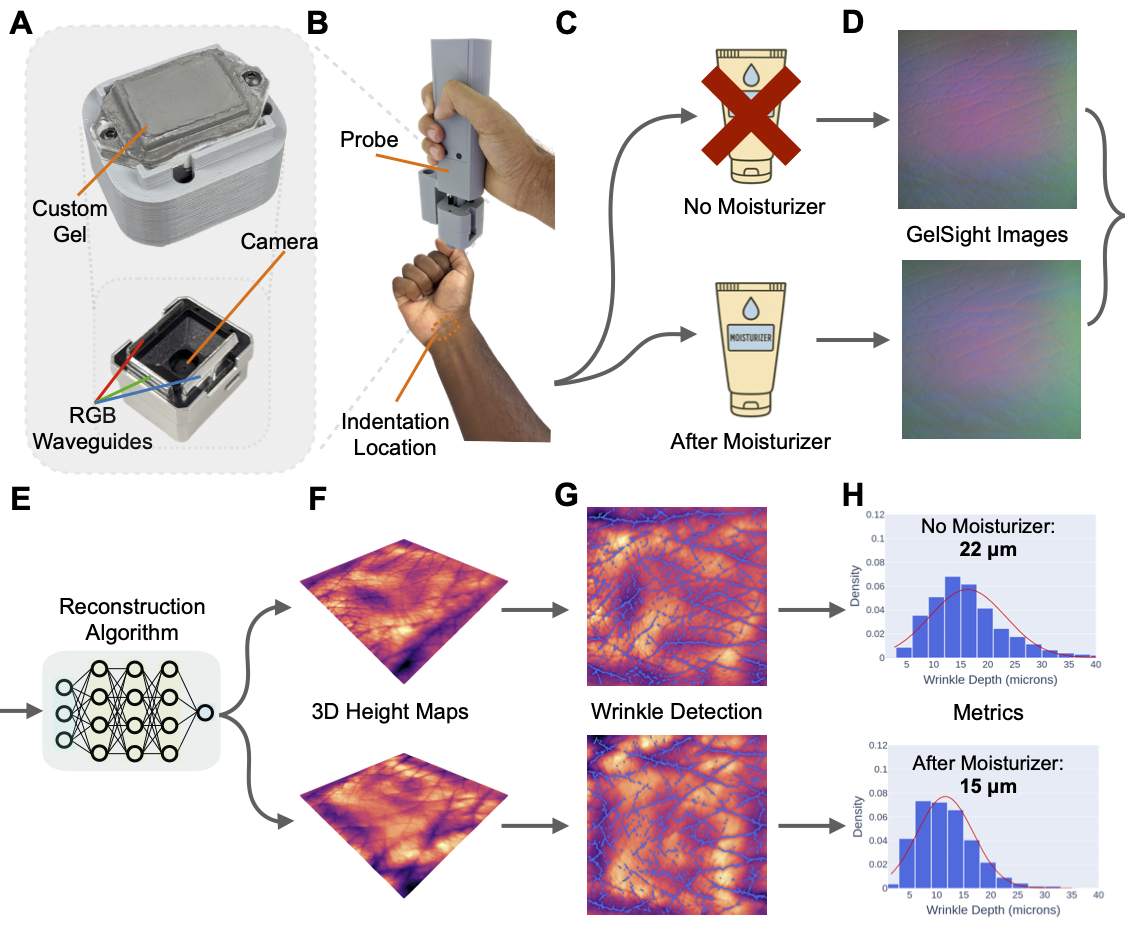

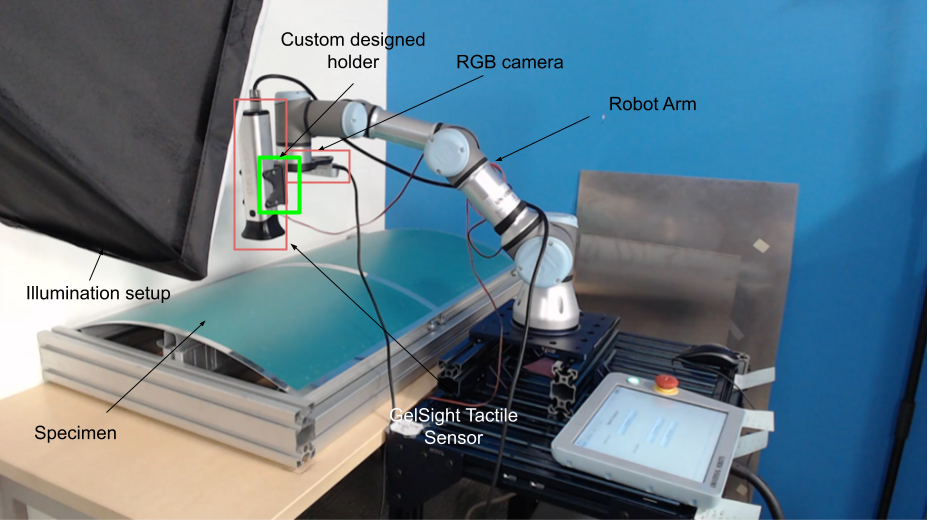

Akhil Padmanabha, Arpit Agarwal, Catherine Li, Austin Williams, Dinesh K. Patel, Sankalp Chopkar, Achu Wilson, Ahmet Ozkan, Wenzhen Yuan, Sonal Choudhary, Arash Mostaghimi, Zackory Erickson, Carmel Majidi.

PDF

Three-dimensional (3-D) skin surface reconstruction offers promise for objective and quantitative dermatological assessment, but no portable, high-resolution device exists that has been validated and used for depth reconstruction across various body locations. We present a compact 3-D skin reconstruction probe based on GelSight tactile imaging with a custom elastic gel and a learning-based reconstruction algorithm for micron-level wrinkle height estimation. Our device, integrated into a handheld probe with force sensing for consistent contact, achieves a mean absolute error of 12.55 microns on wrinkle-like test objects. In a study with 15 participants without skin disorders, we provide the first validated wrinkle depth metrics across multiple body regions. We further demonstrate statistically significant reductions in wrinkle height at three locations following over-the-counter moisturizer application. Our work offers a validated tool for clinical and cosmetic skin analysis, with potential applications in diagnosis, treatment monitoring, and skincare efficacy evaluation.

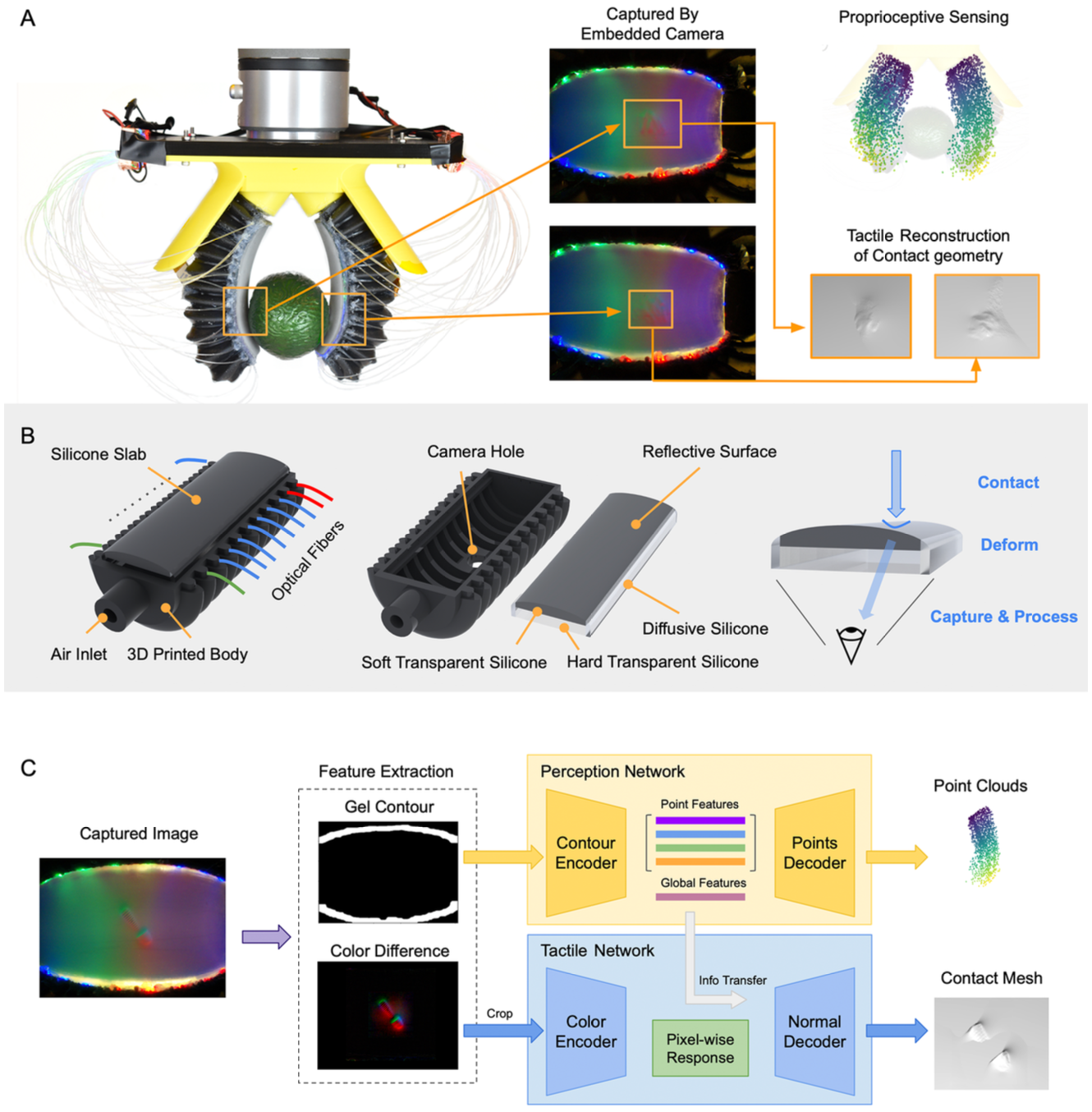

Ruohan Zhang, Uksang Yoo, Yichen Li, Arpit Agarwal, Wenzhen Yuan.

PDF

Soft pneumatic robot manipulators are popular in industrial and human-interactive applications due to their compliance and flexibility. However, deploying them in real-world scenarios requires advanced sensing for tactile feedback and proprioception. Our work presents a novel vision-based approach for sensorizing soft robots. We demonstrate our approach on PneuGelSight, a pioneering pneumatic manipulator featuring high-resolution proprioception and tactile sensing via an embedded camera. To optimize the sensor's performance, we introduce a comprehensive pipeline that accurately simulates its optical and dynamic properties, facilitating a zero-shot knowledge transition from simulation to real-world applications. PneuGelSight and our sim-to-real pipeline provide a novel, easily implementable, and robust sensing methodology for soft robots, paving the way for the development of more advanced soft robots with enhanced sensory capabilities.

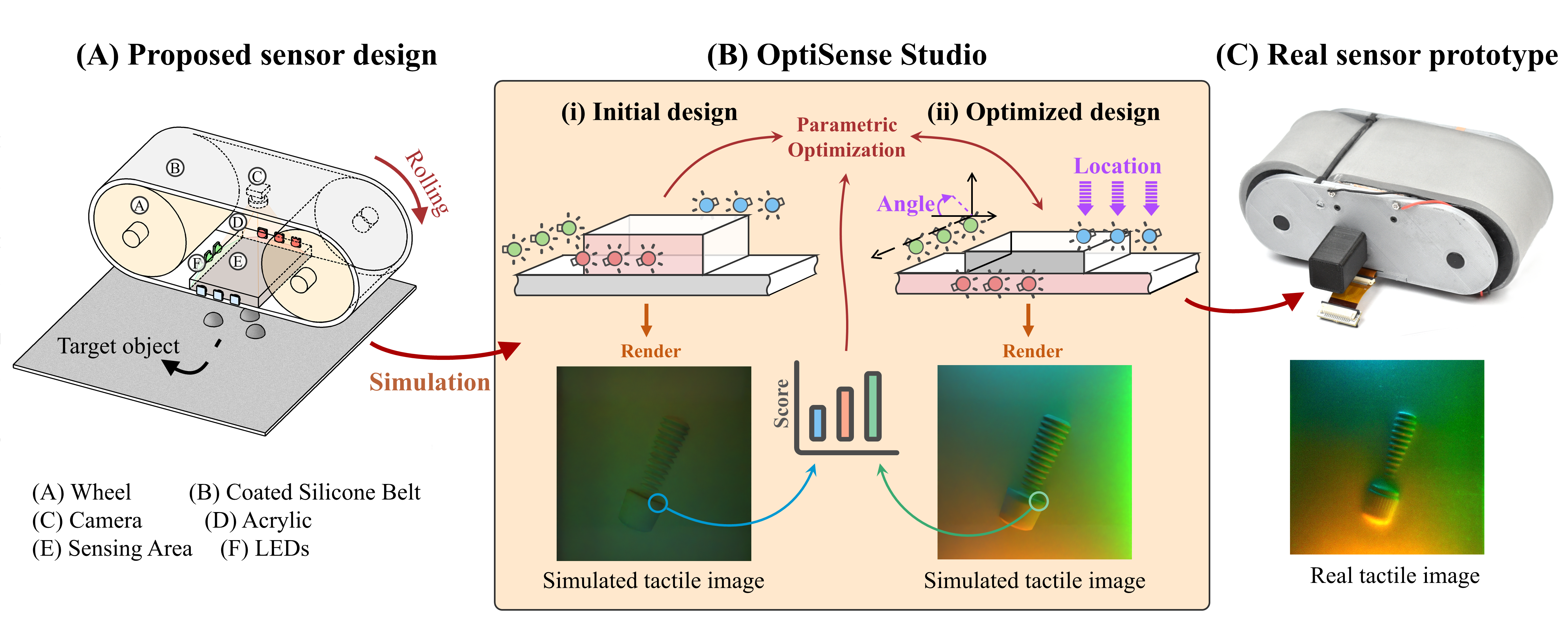

Arpit Agarwal, Mohammad Amin Mirzaee, Xiping Sun, Wenzhen Yuan.

PDF

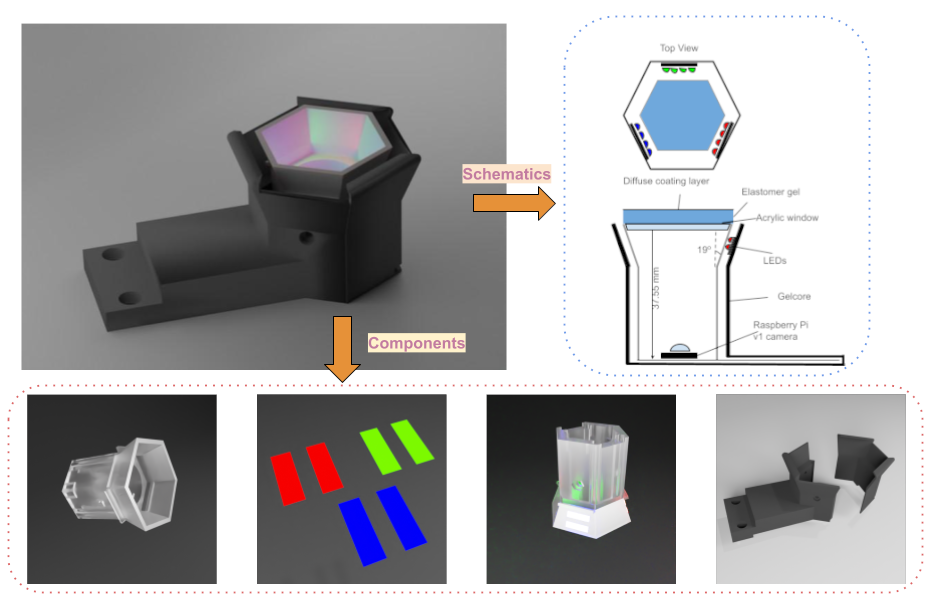

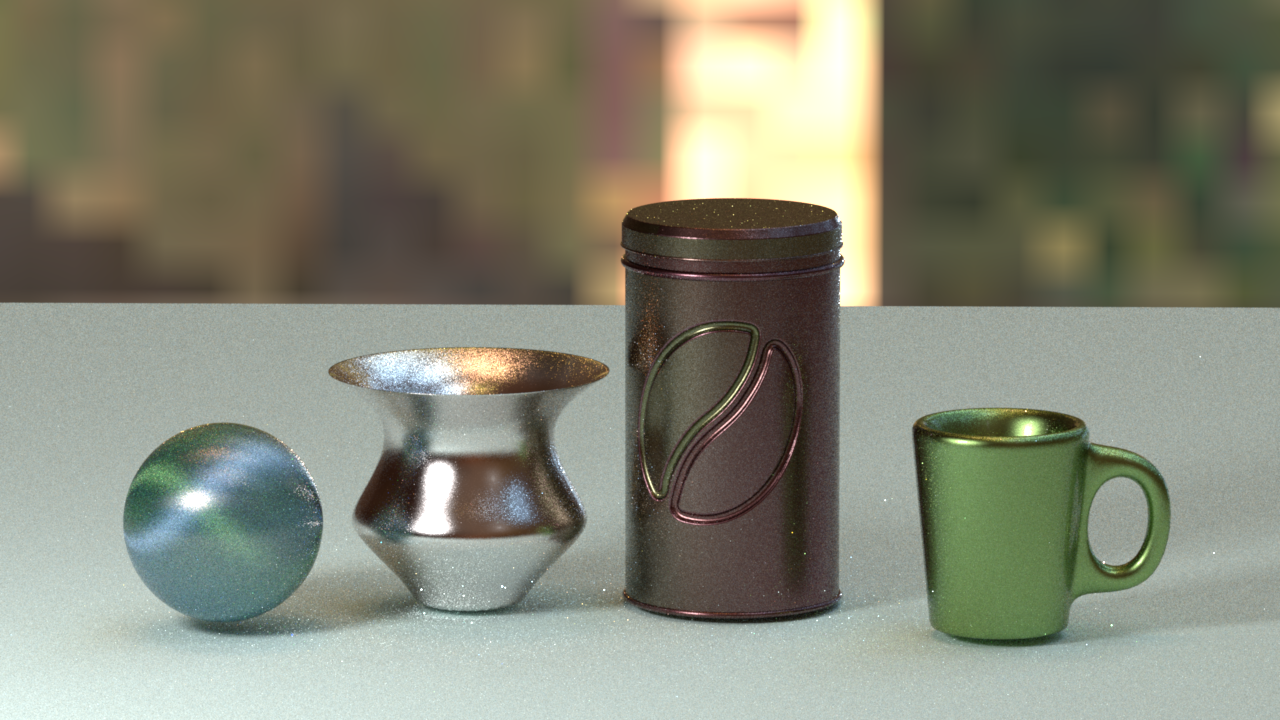

The GelSight family of vision-based tactile sensors has proven to be effective for multiple robot perception and manipulation tasks. These sensors are based on an internal optical system and an embedded camera to capture the deformation of the soft sensor surface, inferring the high-resolution geometry of the objects in contact. However, customizing the sensors for different robot hands requires a tedious trial-and-error process to redesign the optical system. In this paper, we formulate the GelSight sensor design process as a systematic and objective-driven design problem and perform the design optimization with a physically accurate optical simulation. The method is based on modularizing and parameterizing the sensor’s optical components and designing four generalizable objective functions to evaluate the sensor. We implement the method with an interactive and easy-to-use toolbox called OptiSense Studio. With the toolbox, non-sensor experts can quickly optimize their sensor design in both forward and inverse ways following our predefined modules and steps. We demonstrate our system with four different GelSight sensors by quickly optimizing their initial design in simulation and transferring it to the real sensors.

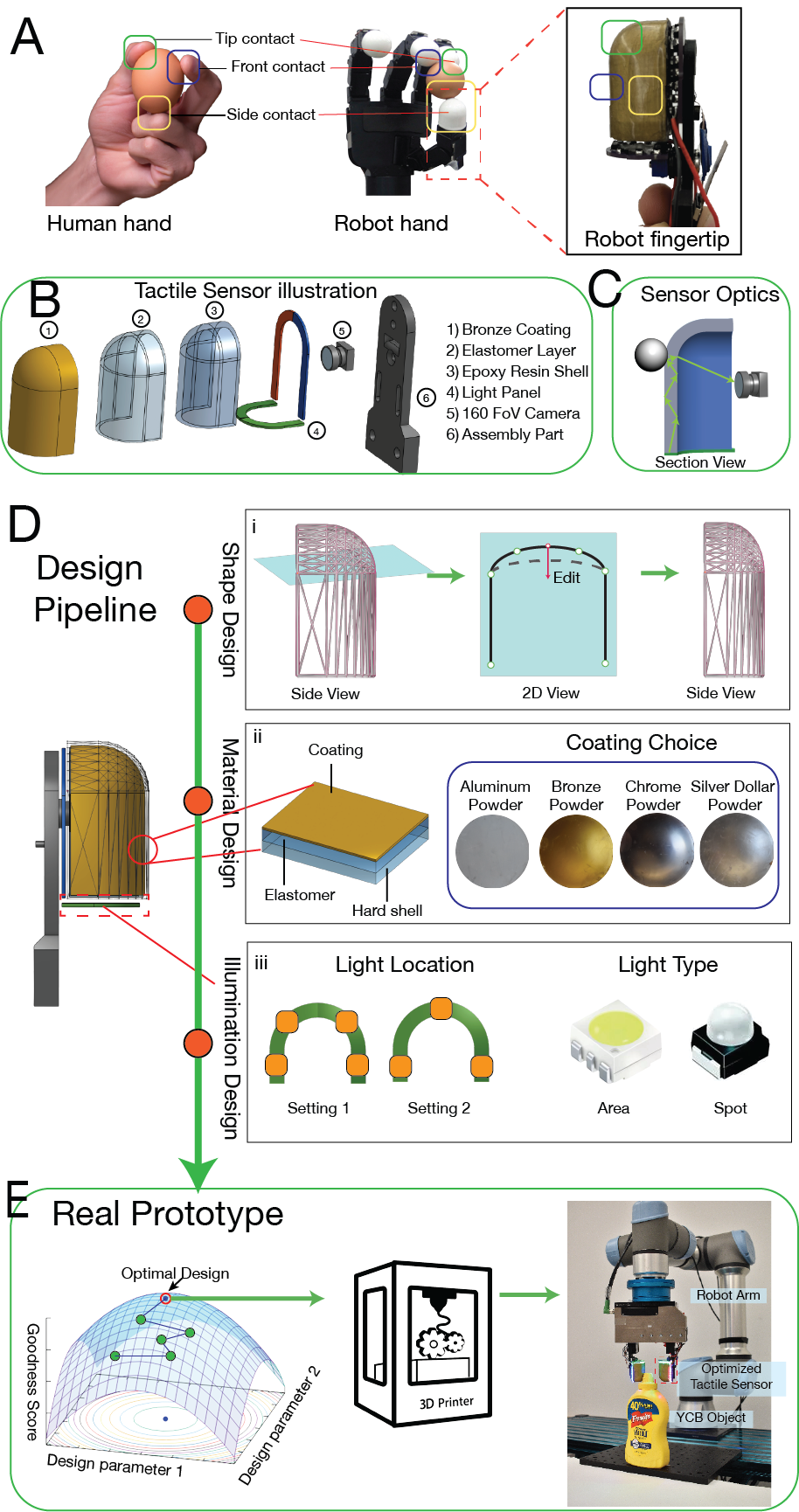

Arpit Agarwal1, Achu Wilson1, Timothy Man1, Edward Adelson3, Ioannis Gkioulekas1 and Wenzhen Yuan2

Affiliations: 1 - Carnegie Mellon University, 2 - UIUC, 3 - MIT

High-resolution tactile sensors are very helpful to robots for fine-grained perception and manipulation tasks, but designing those sensors is challenging. This is because the designs are based on the compact integration of multiple optical elements, and it is difficult to understand the correlation between the element arrangements and the sensor accuracy by trial and error. In this work, we introduce the first-ever digital design of vision-based tactile sensors using a physically accurate light simulator and machine intelligence. The framework modularizes the design process, parameterizes the sensor components, and contains an evaluation metric to quantify a sensor's performance. We quantify the effects of sensor shape, illumination setting, and sensing surface material on tactile sensor performance using our evaluation metric. For the first time, to our knowledge, the proposed optical simulation framework can replicate the tactile image of the real sensor prototype. Using our approach we can substantially improve the design of a fingertip GelSight sensor. This improved design performs approximately 5 times better than previous state-of-the-art human-expert design at real-world robotic tactile embossed text detection. Our simulation approach can be used with any vision-based tactile sensor to produce a physically accurate tactile image. Overall, our approach maps human intelligence to machine intelligence for the automatic design of sensorized soft robots and opens the door for tactile-driven dexterous manipulation.

Yuxiang Ma+3, Arpit Agarwal+1, Sandra Liu+3, Wenzhen Yuan2, and Edward Adelson3

Affiliations: 1 - Carnegie Mellon University, 2 - UIUC, 3 - MIT

+ Authors with equal contributions

ArXiv | Video

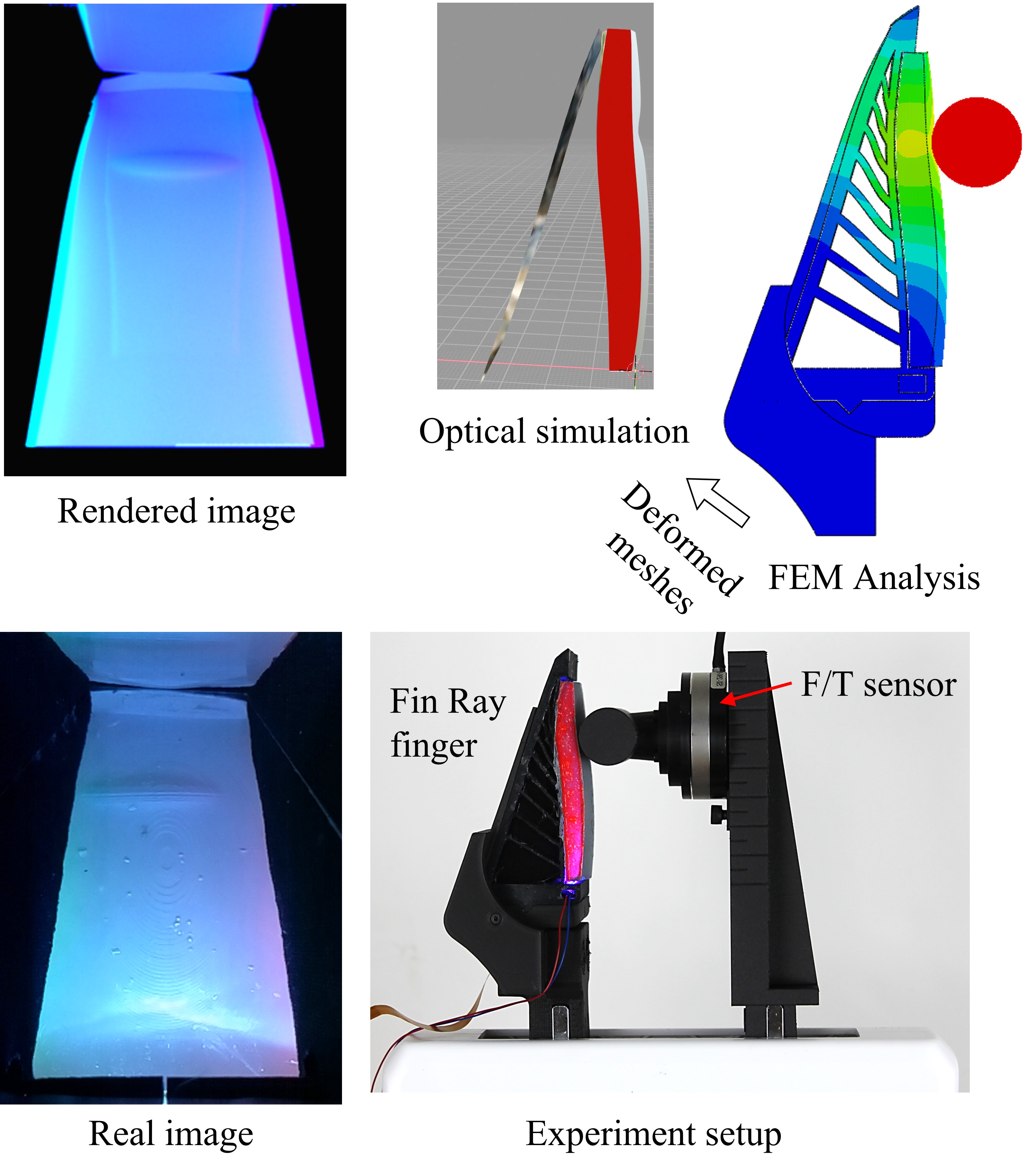

Compliant grippers enable robots to work with humans in unstructured environments. In general, these grippers can improve with tactile sensing to estimate the state of objects around them to precisely manipulate objects. However, co-designing compliant structures with high-resolution tactile sensing is a challenging task. We propose a simulation framework for the end-to-end forward design of GelSight Fin Ray sensors. Our simulation framework consists of mechanical simulation using the finite element method (FEM) and optical simulation including physically based rendering (PBR). To simulate the fluorescent paint used in these GelSight Fin Rays, we propose an efficient method that can be directly integrated in PBR. Using the simulation framework, we investigate design choices available in the compliant grippers, namely gel pad shapes, illumination conditions, Fin Ray gripper sizes, and Fin Ray stiffness. This infrastructure enables faster design and prototype time frames of new Fin Ray sensors that have various sensing areas, ranging from 48mm x 18mm to 70mm x 35mm. Given the parameters we choose, we can thus optimize different Fin Ray designs and show their utility in grasping day-to-day objects.

Arpit Agarwal1, Abhiroop Ajith2, Chengtao Wen2, Veniamin Stryzheus3, Brian Miller3, Matthew Chen3, Micah K. Johnson4, Jose Luis Susa Rincon2, Justinian Rosca2 and Wenzhen Yuan1

Affiliations: 1 - Carnegie Mellon University, 2 - Siemens Corporations, 3 - Boeing, 4 - GelSight Inc.

PDF | Dataset

In manufacturing processes, surface inspection is a key requirement for quality assessment and damage localization. Due to this, automated surface anomaly detection has become a promising area of research in various industrial inspection systems. A particular challenge in industries with large-scale components, like aircraft and heavy machinery, is inspecting large parts with very small defect dimensions. Moreover, these parts can have curved shapes. To address this challenge, we present a 2-stage multi-modal inspection pipeline with visual and tactile sensing. Our approach combines the best of both visual and tactile sensing by identifying and localizing defects using a global view (vision) and then tactile scanning the localized area to identify the remaining defects. To benchmark our approach, we propose a novel real-world dataset with multiple metallic defect types per image, collected in production environments on real aerospace manufacturing parts, as well as online robot experiments in two environments. Our approach is able to identify 85% of defects using Stage I and 100% of defects after Stage II.

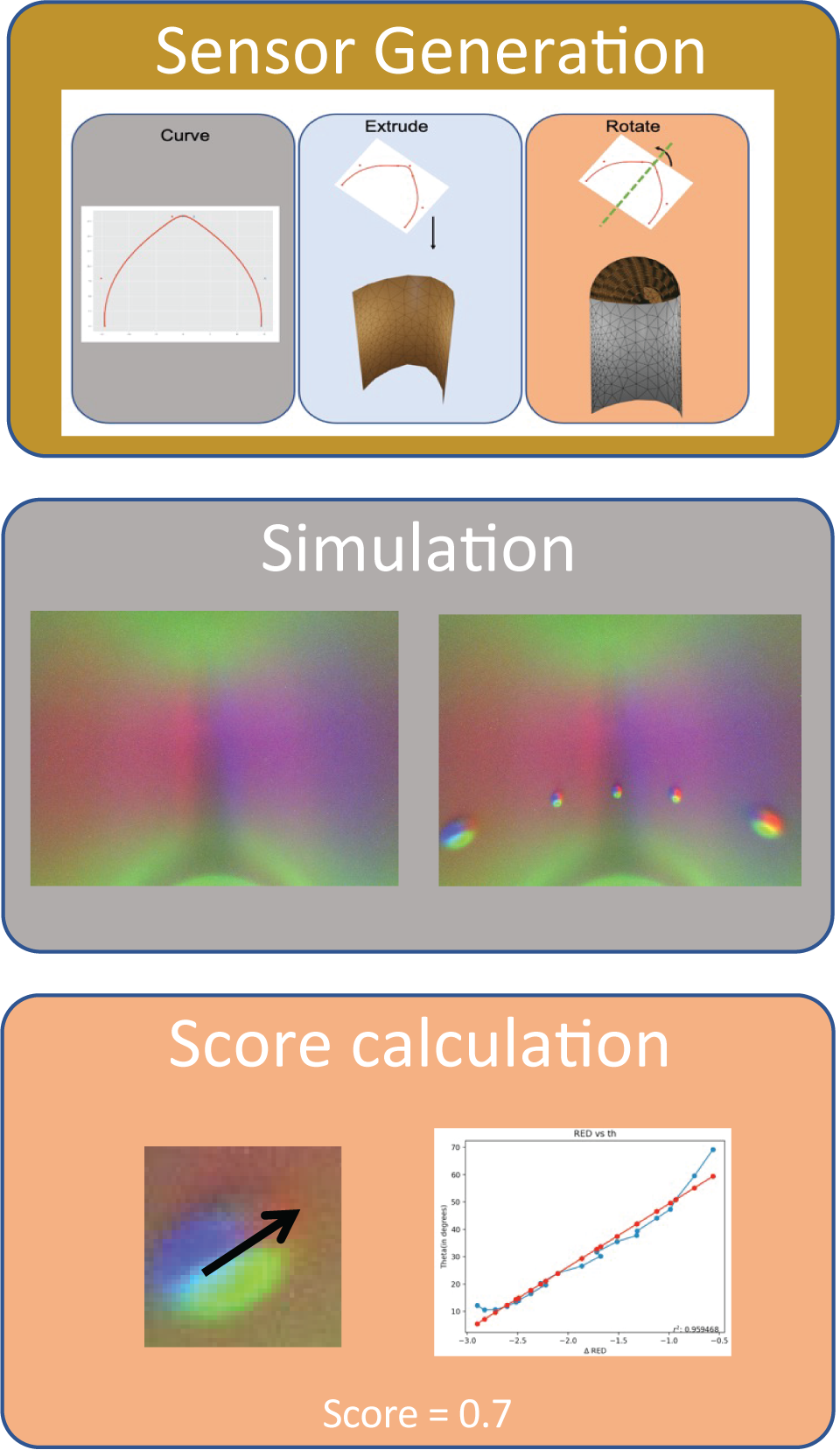

Arpit Agarwal, Timothy Man, Edward Adelson, Ioannis Gkioulekas, Wenzhen Yuan.

PDF

We leverage physics-based rendering techniques to simulate the light transport process inside curved vision-based tactile sensor designs. We use physically-grounded models of light and material in our simulation. We also use lightweight and fast calibration methods for fitting the analytical models of light and material for our prototype. Given a calibrated simulation framework, we propose a tactile sensor shape optimization pipeline. Towards this goal, we propose a low-dimensional tactile sensor shape parameterization and automatically generate the full sensor prototype and an indenter surface virtually, which allows us to validate the sensor performance across the sensor surface. Our main technical results include (a) accurately matching RGB images between simulation and a physical prototype, (b) generating improved tactile sensor shapes, and (c) characterizing the design parameter space using appropriate light piping metrics. Our physically accurate simulation framework offers the ability to generate accurate RGB images for arbitrary vision-based tactile sensors. The parameter space exploration gives us high-level guidelines on the design of tactile sensors for specific applications. Lastly, our system allows us to characterize the various tactile sensor designs in terms of their 3D shape reconstruction ability on different parts of the sensor surface.

Arpit Agarwal, Tim Man, Wenzhen Yuan.

Conference Version | Extended Version | project page | code

Tactile sensing has seen rapid adoption with the advent of vision-based tactile sensors. Vision-based tactile sensors are compact and inexpensive, and provide high-resolution data for precise in-hand manipulation and human-robot interaction. However, the simulation of tactile sensors is still a challenge. In this paper, we built the first fully general optical tactile simulation system for a GelSight sensor using physics-based rendering techniques. We propose physically accurate light models and show in-depth analysis of individual components of our simulation pipeline. Our system outperforms previous simulation techniques qualitatively and quantitatively on image similarity metrics.

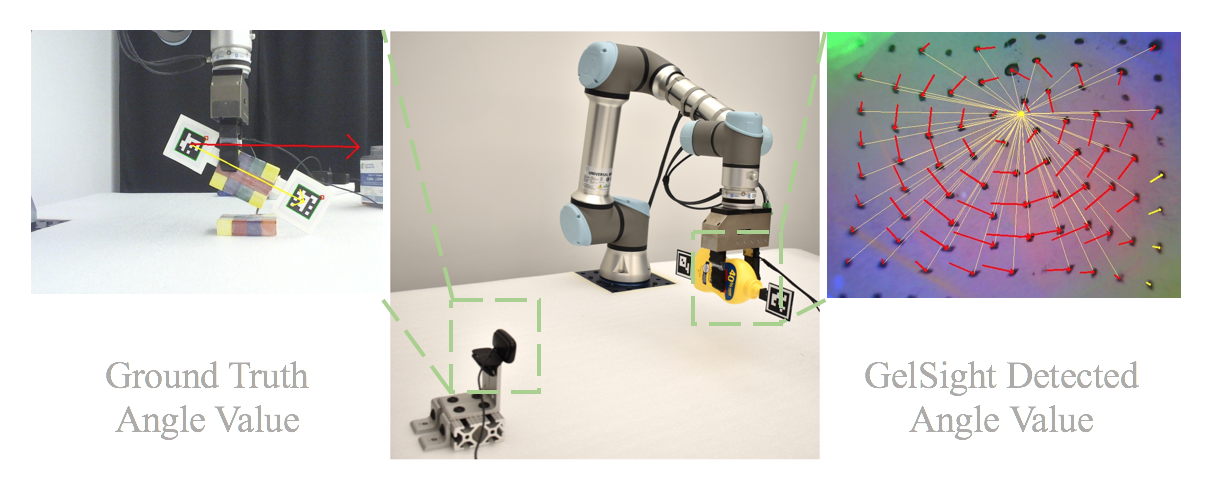

Raj Kolamuri, Zilin Si, Yufan Zhang, Arpit Agarwal, Wenzhen Yuan.

PDF | project page

Rotational displacement about the grasping point is a common grasp failure when an object is grasped at a location away from its center of gravity. Tactile sensors with soft surfaces, such as GelSight sensors, can detect the rotation patterns on the contacting surfaces when the object rotates. In this work, we propose a model-based algorithm that detects those rotational patterns and measures rotational displacement using the GelSight sensor. We also integrate the rotation detection feedback into a closed-loop regrasping framework, which detects the rotational failure of a grasp at an early stage and drives the robot to a stable grasp pose. We validate our proposed rotation detection algorithm and grasp-regrasp system on a self-collected dataset and in online experiments to show how our approach accurately detects the rotation and increases grasp stability.
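As a rough illustration of what measuring rotational displacement from a contact surface can look like, the sketch below estimates a single in-plane rotation angle from matched 2D points tracked before and after the object moves. This is a generic least-squares fit, not the algorithm from the paper; the function name `estimate_rotation_deg` and the assumption that tracked point correspondences are already available are hypothetical choices made for this example.

```python
import math


def estimate_rotation_deg(before, after):
    """Least-squares in-plane rotation (degrees) between matched 2D point sets.

    `before` and `after` are equal-length lists of (x, y) tuples, assumed to be
    the same surface points tracked across two tactile frames (hypothetical input).
    Positive angles are counter-clockwise in a y-up frame; with image coordinates
    (y pointing down) the same value appears clockwise on screen.
    """
    n = len(before)
    if n == 0 or n != len(after):
        raise ValueError("point sets must be non-empty and of equal length")

    # Remove translation by centering both point sets on their centroids.
    cx0 = sum(x for x, _ in before) / n
    cy0 = sum(y for _, y in before) / n
    cx1 = sum(x for x, _ in after) / n
    cy1 = sum(y for _, y in after) / n

    cos_sum = 0.0  # accumulates dot products, proportional to cos(theta)
    sin_sum = 0.0  # accumulates 2D cross products, proportional to sin(theta)
    for (x0, y0), (x1, y1) in zip(before, after):
        ax, ay = x0 - cx0, y0 - cy0
        bx, by = x1 - cx1, y1 - cy1
        cos_sum += ax * bx + ay * by
        sin_sum += ax * by - ay * bx

    return math.degrees(math.atan2(sin_sum, cos_sum))
```

In a closed-loop setting, an estimate like this could be compared against a threshold to decide when to trigger a regrasp; the threshold value and the tracking step that produces the point correspondences are left open here.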

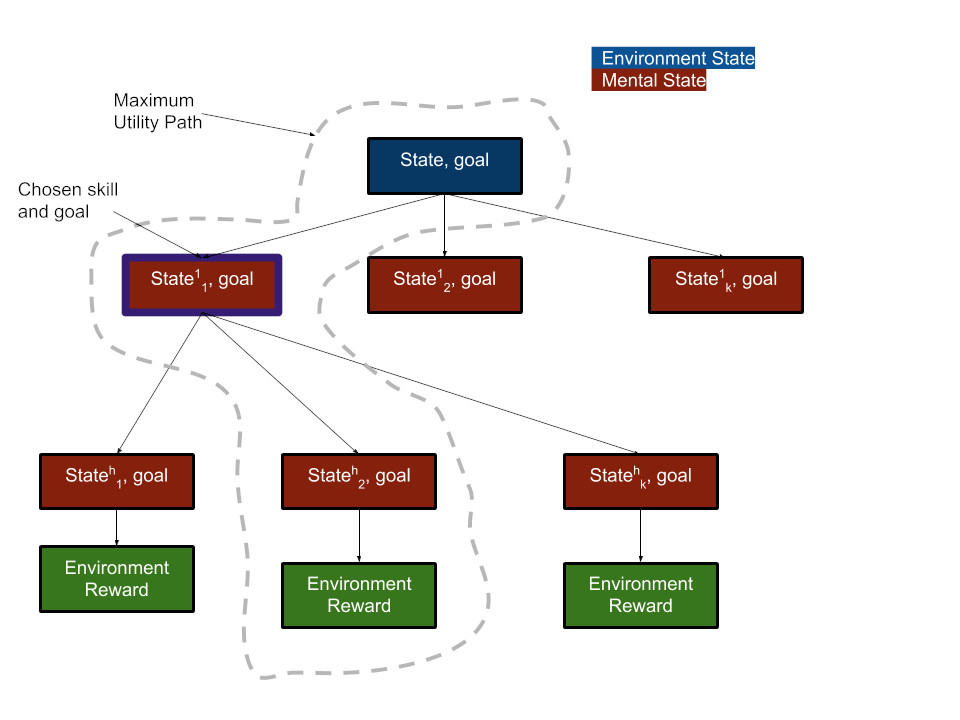

Arpit Agarwal, Katharina Muelling, Katerina Fragkiadaki.

PDF | project page | code

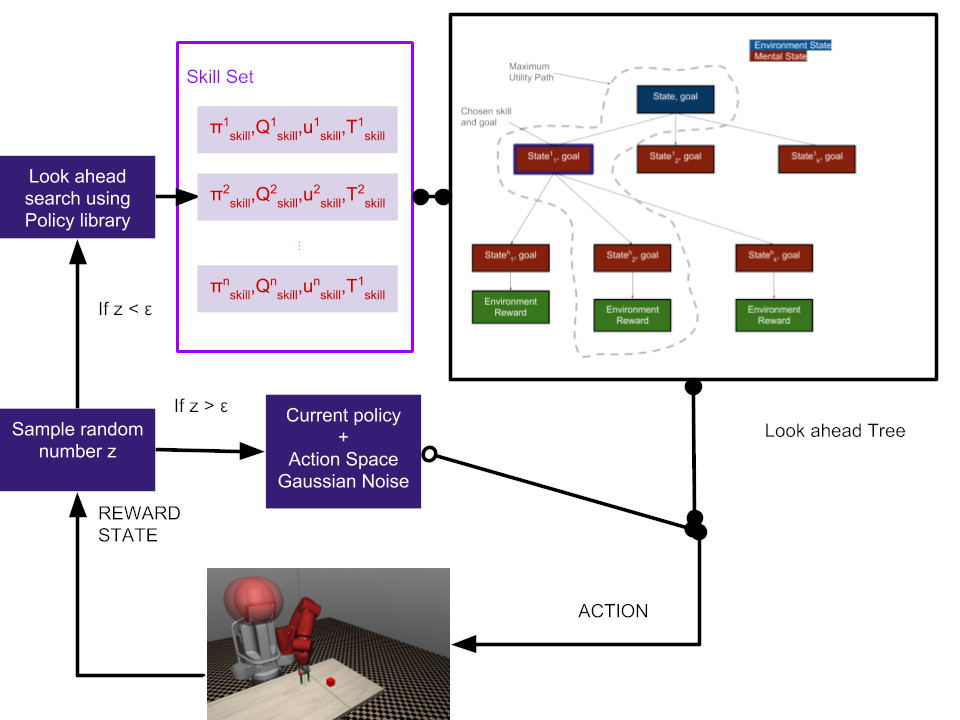

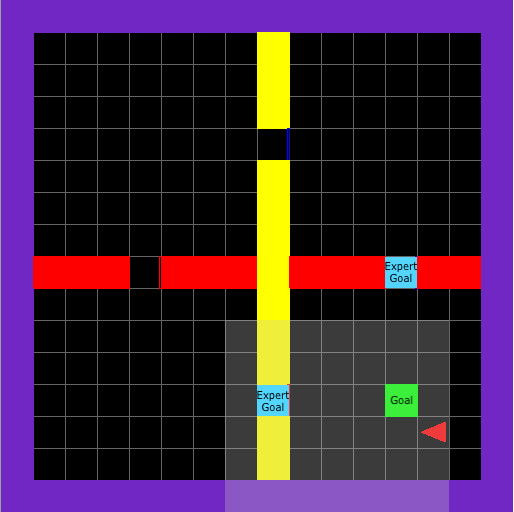

We propose an exploration method that incorporates lookahead search over basic learnt skills and their dynamics, and use it for reinforcement learning (RL) of manipulation policies. Our skills are multi-goal policies learned in isolation in simpler environments using existing multi-goal RL formulations, analogous to options or macroactions. Coarse skill dynamics, i.e., the state transition caused by a (complete) skill execution, are learnt and are unrolled forward during lookahead search. Policy search benefits from temporal abstraction during exploration, though itself operates over low-level primitive actions, and thus the resulting policies do not suffer from suboptimality and inflexibility caused by coarse skill chaining. We show that the proposed exploration strategy results in effective learning of complex manipulation policies faster than current state-of-the-art RL methods, and converges to better policies than methods that use options or parameterized skills as building blocks of the policy itself, as opposed to guiding exploration.

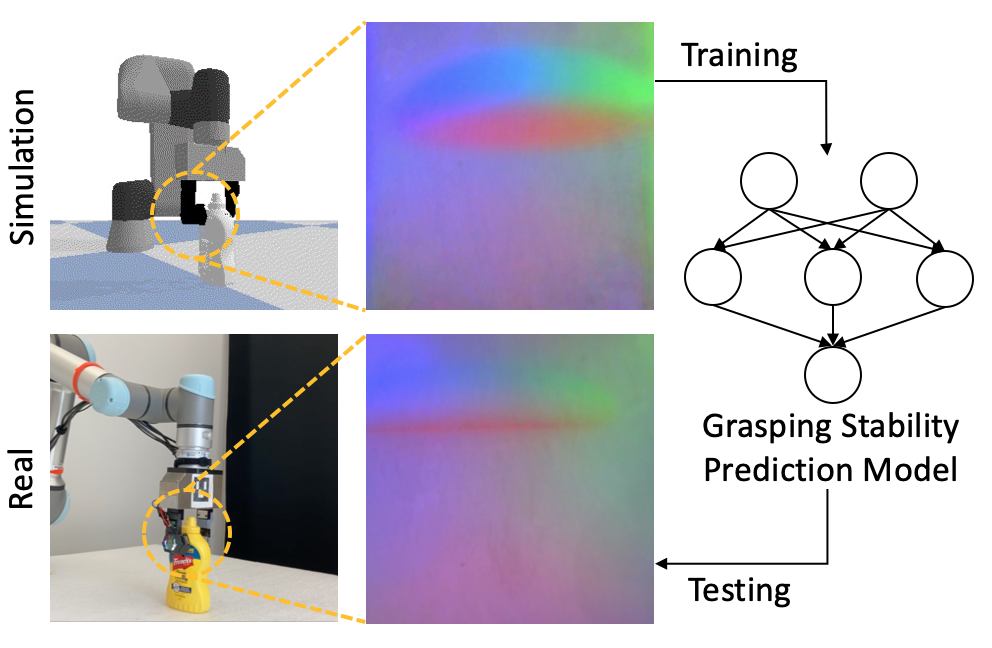

Zilin Si, Zirui Zhu, Arpit Agarwal, Wenzhen Yuan.

PDF | project page | code

Robot simulation has been an essential tool for data-driven manipulation tasks. However, most existing simulation frameworks lack either efficient and accurate models of physical interactions with tactile sensors or realistic tactile simulation. This makes the sim-to-real transfer for tactile-based manipulation tasks still challenging. In this work, we integrate simulation of robot dynamics and vision-based tactile sensors by modeling the physics of contact. This contact model uses simulated contact forces at the robot's end-effector to inform the generation of realistic tactile outputs. To eliminate the sim-to-real transfer gap, we calibrate our physics simulator of robot dynamics, contact model, and tactile optical simulator with real-world data, and then we demonstrate the effectiveness of our system on a zero-shot sim-to-real grasp stability prediction task where we achieve an average accuracy of 90.7% on various objects. Experiments reveal the potential of applying our simulation framework to more complicated manipulation tasks. We open-source our simulation framework at https://github.com/CMURoboTouch/Taxim/tree/taxim-robot

Reinforcement learning is a computational approach to learning from interaction. However, learning from scratch using reinforcement learning requires an exorbitant number of interactions with the environment even for simple tasks. One way to alleviate the problem is to reuse previously learned skills, as humans do. This thesis provides frameworks and algorithms to build and reuse a Skill Library. Firstly, we extend the Parameterized Action Space formulation using our Skill Library to the multi-goal setting and show improvements in learning using hindsight at a coarse level. Secondly, we use our Skill Library for exploring at a coarser level to learn the optimal policy for continuous control. We demonstrate the benefits, in terms of speed and accuracy, of the proposed approaches for a set of complex real-world robotic manipulation tasks in which some state-of-the-art methods completely fail.

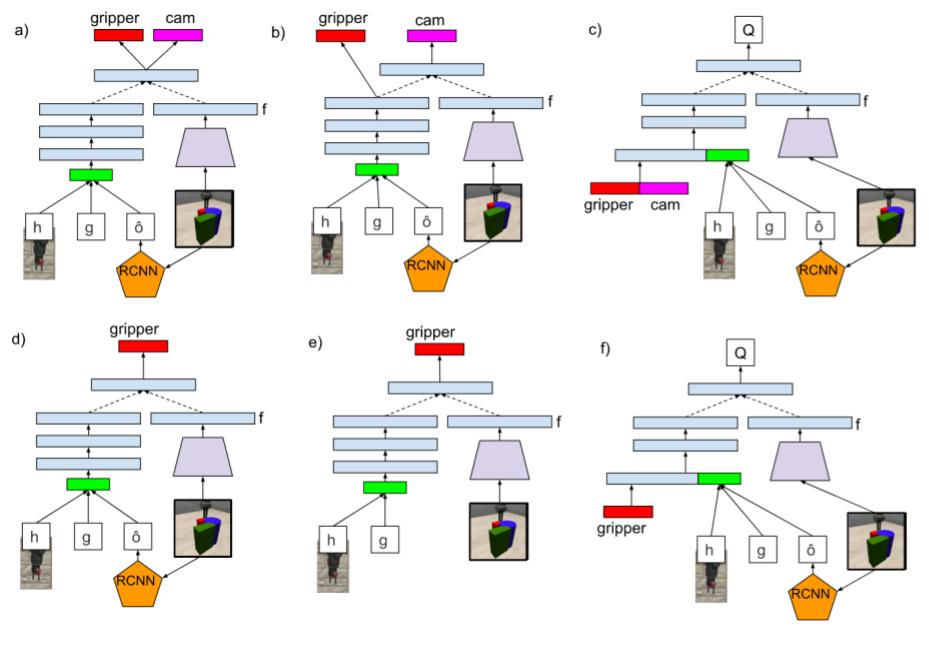

Ricson Cheng, Arpit Agarwal, Katerina Fragkiadaki.

PDF

We consider artificial agents that learn to jointly control their gripper and camera in order to reinforcement learn manipulation policies in the presence of occlusions from distractor objects. Distractors often occlude the object of interest and cause it to disappear from the field of view. We propose hand/eye controllers that learn to move the camera to keep the object within the field of view and visible, in coordination with manipulating it to achieve the desired goal, e.g., pushing it to a target location. We incorporate structural biases of object-centric attention within our actor-critic architectures, which our experiments suggest to be key to good performance. Our results further highlight the importance of curriculum with regard to environment difficulty. The resulting active vision / manipulation policies outperform static camera setups for a variety of cluttered environments.

Arpit Agarwal

Report

Ashwin Khadke, Arpit Agarwal, Anahita Mohseni-Kabir, Devin Schwab

Report